RSVR: Experimental Virtual Reality in Rust

Introduction

Virtual Reality is an interesting concept, but all current implementations (at least the ones that I found) are inaccessible, either because they depend on non-free software (e.g. Google Cardboard) or because of their price (e.g. HTC Vive).

I decided to start my own project, called RSVR (Rust VR), with the following goals in mind:

- Uses inexpensive or already-owned hardware

- No dependencies on non-free software

With these restrictions in mind, it seemed clear that the most accessible route would be to use existing head-mounts for mobile phones, such as those used with Google Cardboard. I went for an Intempo-branded VR head-mount that cost about 10 pounds at a relatively local bargain shop.

Because these head-mounts require mobile phones, I will eventually need to get visuals onto an Android mobile phone. I should note that I have not done any Android development in about 4 years, and it is also something I would like to avoid, because I hope to stop using Android in the next year or so! (After all, I want to remove RSVR's dependency on non-free software, and every Android phone I am aware of relies on some. I also find the platform lacking in flexibility.)

Proof of Concept

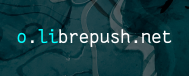

To test the feasibility of the concept, I first modified one of my university graphics course's practicals to render stereoscopically, yielding this visual:

When I viewed it in a gallery application on a phone, my eyes were able to focus on it, though I could tell that tweaks would be needed for comfort. Still, this was a good sign, so I decided to go ahead with the RSVR project.

Research

The head-mount I purchased did not come with any information about its optical parameters, but I did find a QR code for a different Google Cardboard viewer online.

It turns out that these QR codes contain a goo.gl short URL, which redirects to a Google URL containing a base64-encoded parameter, p.
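The extraction step can be illustrated with a standard-library-only Rust sketch. It is not the method used here (that was the Python session further down). The URL is a hypothetical example.com placeholder, and `query_param` and `decode_base64` are hypothetical helpers. It is uncertain whether `p` uses the standard or the URL-safe base64 alphabet, so the decoder accepts both and treats `=` padding as optional. The query value is not percent-decoded.

```rust
/// Hypothetical helper: returns the raw (not percent-decoded) value of query parameter `name`.
fn query_param<'a>(url: &'a str, name: &str) -> Option<&'a str> {
    let query = url.split_once('?')?.1;
    // Ignore any fragment ("#...") that follows the query string.
    let query = query.split('#').next().unwrap_or(query);
    query.split('&').find_map(|pair| {
        let (key, value) = pair.split_once('=')?;
        if key == name { Some(value) } else { None }
    })
}

/// Hypothetical helper: decodes base64, accepting both the standard ('+', '/') and
/// URL-safe ('-', '_') alphabets. Padding is optional; any other character gives None.
fn decode_base64(input: &str) -> Option<Vec<u8>> {
    let mut out = Vec::with_capacity(input.len() * 3 / 4);
    let mut buf: u32 = 0; // pending bits, right-aligned
    let mut bits: u32 = 0; // how many bits in `buf` are still pending
    for c in input.bytes() {
        let v = match c {
            b'A'..=b'Z' => c - b'A',
            b'a'..=b'z' => c - b'a' + 26,
            b'0'..=b'9' => c - b'0' + 52,
            b'+' | b'-' => 62,
            b'/' | b'_' => 63,
            b'=' => break, // padding marks the end of the data
            _ => return None,
        };
        buf = (buf << 6) | u32::from(v);
        bits += 6;
        if bits >= 8 {
            bits -= 8;
            out.push((buf >> bits) as u8); // emit the top 8 pending bits
            buf &= (1 << bits) - 1; // keep only the bits not yet emitted
        }
    }
    Some(out)
}

fn main() {
    // Placeholder URL, not the real Cardboard URL.
    let url = "https://example.com/viewer?p=aGVsbG8";
    let p = query_param(url, "p").expect("no p parameter");
    let bytes = decode_base64(p).expect("invalid base64");
    println!("{} bytes: {:?}", bytes.len(), bytes);
}
```

The decoded bytes are a serialised protobuf message rather than text. That is why a definition file is needed to make sense of them.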

Knowing Google, I assumed they would be using Google Protocol Buffers to store this information, which would make it non-trivial to read without the protobuf definition file. Luckily, I was able to find CardboardDevice.proto in a GitHub repository. It even contains descriptions of the fields!

I used the protoc tool on the CardboardDevice.proto file to generate Python code to decode the viewer profile, then dumped the information into my notebook:

```
/tmp$ mkdir pyout
/tmp$ protoc --python_out=pyout CardboardDevice.proto
/tmp$ cd pyout/
/tmp/pyout$ python3
>>> import base64
>>> import CardboardDevice_pb2
>>> profile = base64.decodebytes(b"[… insert base64-encoded profile here …]")
>>> devparams = CardboardDevice_pb2.DeviceParams()
>>> devparams.ParseFromString(profile)
>>> devparams
[… profile values were dumped here …]
```
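Without CardboardDevice.proto, a decoder can only recover field numbers and wire types, not field names or meanings. The following minimal, standard-library-only Rust sketch of a protobuf wire-format walker illustrates this. The `Value` enum and `parse_fields` function are hypothetical. The example message is hand-built, not a real viewer profile. Groups (wire types 3 and 4) are not handled. Packed repeated floats would appear as opaque `Bytes`.

```rust
/// One decoded field value, classified only by its wire type.
#[derive(Debug, PartialEq)]
enum Value {
    Varint(u64),    // wire type 0
    Fixed64(u64),   // wire type 1
    Bytes(Vec<u8>), // wire type 2: strings, sub-messages, packed repeated fields
    Fixed32(u32),   // wire type 5: e.g. a float's raw bits
}

/// Reads a base-128 varint (least-significant group first) starting at `*pos`.
fn read_varint(buf: &[u8], pos: &mut usize) -> Option<u64> {
    let mut result = 0u64;
    for shift in (0..64).step_by(7) {
        let byte = *buf.get(*pos)?;
        *pos += 1;
        result |= u64::from(byte & 0x7f) << shift;
        if byte & 0x80 == 0 {
            return Some(result);
        }
    }
    None // longer than 10 bytes: malformed
}

/// Splits a message into (field number, value) pairs; returns None if it is malformed.
fn parse_fields(buf: &[u8]) -> Option<Vec<(u64, Value)>> {
    let mut pos = 0;
    let mut fields = Vec::new();
    while pos < buf.len() {
        let key = read_varint(buf, &mut pos)?;
        let value = match key & 0x7 {
            0 => Value::Varint(read_varint(buf, &mut pos)?),
            1 => {
                let mut b = [0u8; 8];
                b.copy_from_slice(buf.get(pos..pos + 8)?);
                pos += 8;
                Value::Fixed64(u64::from_le_bytes(b))
            }
            2 => {
                let len = read_varint(buf, &mut pos)? as usize;
                let bytes = buf.get(pos..pos.checked_add(len)?)?.to_vec();
                pos += len;
                Value::Bytes(bytes)
            }
            5 => {
                let mut b = [0u8; 4];
                b.copy_from_slice(buf.get(pos..pos + 4)?);
                pos += 4;
                Value::Fixed32(u32::from_le_bytes(b))
            }
            _ => return None, // groups and invalid wire types are not supported
        };
        fields.push((key >> 3, value));
    }
    Some(fields)
}

fn main() {
    // Hand-built message: field 1 = "abc", field 4 = 0.064 as a float, field 10 = 1.
    let mut msg = vec![0x0a, 3, b'a', b'b', b'c', 0x25];
    msg.extend_from_slice(&0.064f32.to_bits().to_le_bytes());
    msg.extend_from_slice(&[0x50, 0x01]);
    for (field, value) in parse_fields(&msg).expect("malformed message") {
        match value {
            // Without the .proto, "is this fixed32 a float?" is only a guess.
            Value::Fixed32(bits) => println!("field {}: fixed32, as f32 {}", field, f32::from_bits(bits)),
            other => println!("field {}: {:?}", field, other),
        }
    }
}
```

Mapping numbers such as 1 or 4 to names like vendor or inter_lens_distance is exactly the information the .proto file supplies.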

Development

At this point, I had an inkling that I would need to write a few crates:

- rsvr_profile: Crate to parse profile definitions, which I decided to keep in RON (Rusty Object Notation) for now.

- rsvr_opengl: Crate to be used by OpenGL applications for stereoscopic rendering.

- rsvr_tweak: Interactive tool to tweak the profile definition in real time until it feels 'right'. (I would need this because my viewer did not come with a profile of its own.)

- (potentially in the future) rsvr_vulkan: Crate to be used by Vulkan applications.

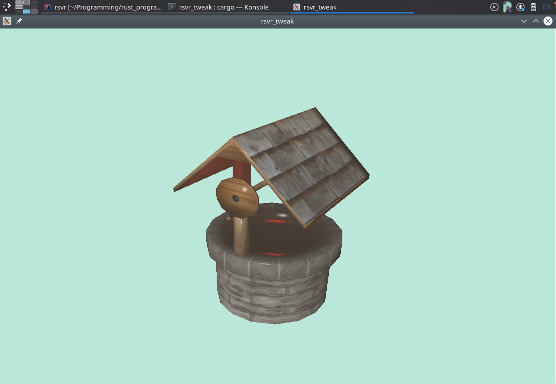

I decided that I would first start by writing rsvr_tweak to load and render Wavefront OBJ model files monoscopically. I found this well on OpenGameArt.org.
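Loading an OBJ model for rendering mostly comes down to reading `v` (vertex position) and `f` (face) statements. The following minimal Rust sketch does this under the stated assumptions and is not rsvr_tweak's actual loader. The `Mesh` struct and `parse_obj` function are hypothetical. Normals, texture coordinates and materials are ignored. Polygons are fan-triangulated. Vertices must be defined before the faces that use them.

```rust
/// Minimal triangle mesh: vertex positions plus 0-based triangle indices.
#[derive(Debug, Default)]
struct Mesh {
    positions: Vec<[f32; 3]>,
    indices: Vec<u32>,
}

/// Parses the `v` and `f` statements of a Wavefront OBJ file. Everything else
/// (normals, texture coordinates, groups, materials, comments) is skipped.
fn parse_obj(src: &str) -> Result<Mesh, String> {
    let mut mesh = Mesh::default();
    for (n, line) in src.lines().enumerate() {
        let err = |msg: String| format!("line {}: {}", n + 1, msg);
        let mut parts = line.split_whitespace();
        match parts.next() {
            Some("v") => {
                let mut p = [0.0f32; 3];
                for c in p.iter_mut() {
                    let tok = parts.next().ok_or_else(|| err("missing coordinate".into()))?;
                    *c = tok.parse::<f32>().map_err(|e| err(e.to_string()))?;
                }
                mesh.positions.push(p);
            }
            Some("f") => {
                let count = mesh.positions.len() as i64;
                let mut face = Vec::new();
                for vert in parts {
                    // Accepts "v", "v/vt", "v//vn" and "v/vt/vn"; only the position index is used.
                    let tok = vert.split('/').next().unwrap_or("");
                    let idx = tok.parse::<i64>().map_err(|e| err(e.to_string()))?;
                    // OBJ indices are 1-based; negative ones count back from the latest vertex.
                    let resolved = if idx > 0 { idx - 1 } else { count + idx };
                    if idx == 0 || resolved < 0 || resolved >= count {
                        return Err(err(format!("vertex index {} out of range", idx)));
                    }
                    face.push(resolved as u32);
                }
                // Fan triangulation: triangles (0, i, i + 1) for i = 1 .. len - 2.
                for i in 1..face.len().saturating_sub(1) {
                    mesh.indices.extend_from_slice(&[face[0], face[i], face[i + 1]]);
                }
            }
            _ => {}
        }
    }
    Ok(mesh)
}

fn main() {
    // A unit quad whose face uses "v/vt/vn"-style tokens with negative indices.
    let src = "v 0 0 0\nv 1 0 0\nv 1 1 0\nv 0 1 0\nf -4/1/1 -3/2/1 -2/3/1 -1/4/1\n";
    let mesh = parse_obj(src).expect("parse failed");
    println!("{} vertices, {} triangles", mesh.positions.len(), mesh.indices.len() / 3);
}
```

The `positions` and `indices` vectors map directly onto a vertex buffer and an index buffer for an indexed draw call.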

One More Eye

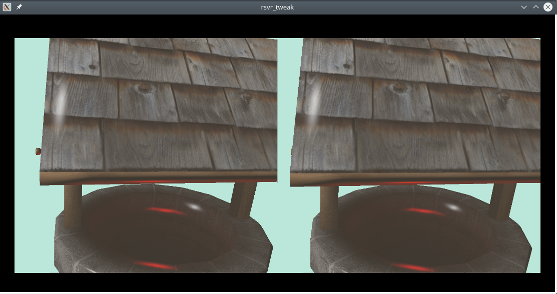

I implemented stereoscopic rendering and also added keybindings to change the viewer parameters on the fly.
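One way to structure side-by-side rendering with live-tweakable parameters is sketched below in Rust. This is an assumption-laden illustration, not RSVR's code. `ViewerParams`, `Eye`, `eye_viewport`, `lens_offset_ndc` and the `[`/`]` bindings are all hypothetical, and the numbers are examples, not measurements of my viewer. Each eye renders into its own half of the window. Each eye's image is then shifted horizontally so that its centre sits under its lens. A common way to do this is to pre-multiply that eye's projection matrix by an x-translation of `lens_offset_ndc` in normalised device coordinates.

```rust
/// Hypothetical viewer parameters, loosely modelled on fields in CardboardDevice.proto.
#[derive(Debug, Clone, Copy)]
struct ViewerParams {
    /// Distance between the two lens centres, in metres.
    inter_lens_distance: f32,
    /// Physical width of the phone's screen, in metres (a phone property, not a viewer one).
    screen_width: f32,
}

#[derive(Debug, Clone, Copy, PartialEq)]
enum Eye {
    Left,
    Right,
}

/// Side-by-side layout: each eye gets half of the window, as (x, y, width, height) in pixels.
fn eye_viewport(eye: Eye, win_w: u32, win_h: u32) -> (u32, u32, u32, u32) {
    let half = win_w / 2;
    match eye {
        Eye::Left => (0, 0, half, win_h),
        Eye::Right => (half, 0, half, win_h),
    }
}

/// Horizontal offset of a lens centre from the centre of that eye's half-viewport,
/// in the half-viewport's normalised device coordinates (which span 2 units).
/// Measured from the left screen edge, the left lens centre is at w/2 - d/2 and the left
/// half's centre at w/4, so the offset is (w/4 - d/2) * 2 / (w/2) = 1 - 2d/w.
/// The right eye is the mirror image.
fn lens_offset_ndc(eye: Eye, p: &ViewerParams) -> f32 {
    let offset = 1.0 - 2.0 * p.inter_lens_distance / p.screen_width;
    match eye {
        Eye::Left => offset,
        Eye::Right => -offset,
    }
}

/// Hypothetical key bindings: '[' and ']' change the inter-lens distance by 1 mm.
fn handle_key(p: &mut ViewerParams, key: char) {
    match key {
        '[' => p.inter_lens_distance -= 0.001,
        ']' => p.inter_lens_distance += 0.001,
        _ => {}
    }
}

fn main() {
    // Example numbers only.
    let mut params = ViewerParams { inter_lens_distance: 0.060, screen_width: 0.110 };
    handle_key(&mut params, ']');
    for eye in [Eye::Left, Eye::Right] {
        println!(
            "{:?}: viewport {:?}, lens offset {:+.3} NDC",
            eye,
            eye_viewport(eye, 1920, 1080),
            lens_offset_ndc(eye, &params)
        );
    }
}
```

When the inter-lens distance is exactly half the screen width, the offset is zero and each lens sits over the centre of its half. Re-evaluating the offset every frame is enough for key presses to take effect immediately.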

Now, just get it on the phone...

With rendering to a local window working, I now had to come up with some method of streaming the video to the phone (granted, it might be possible to run the VR applications on the phone itself, but I would expect a desktop GPU to far outperform a mobile one!).

Taking into account my reluctance to write anything Android-specific, I decided it might be worth trying an in-browser solution using WebRTC (and VP8). Luckily for me, GStreamer has gained a WebRTC implementation, and I had heard at a FOSDEM 2018 talk that GStreamer has some nice Rust bindings.

I was pleasantly surprised by GStreamer; the bindings are as well supported as they are made out to be! A few days later, I had something 'working' but frankly unusable. I only managed 32 fps (or 6.2 fps in debug mode), as measured on the computer. Unfortunately, more frames were being dropped than transmitted, and the latency was on the order of 10 seconds or more... Not a good result!

Using the excellent gst-launch-1.0 tool, I experimented and found that hardware encoding gave much lower latency. My graphics chip doesn't support hardware VP8 encoding but does support hardware H.264 encoding, so this may be an avenue worth exploring. Still, I'm not sure this will solve the issues, because even the software vp8enc encoder (with deadline=1) performs markedly better in gst-launch-1.0 than it does in my application!
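One way to compare encoders in isolation, as with gst-launch-1.0 above, is a test pipeline that encodes a live test pattern and sends it over RTP/UDP. The following Rust sketch only builds and prints such command lines. The `Encoder` enum and `pipeline_args` function are hypothetical, and 127.0.0.1:5000 is an arbitrary destination. The elements themselves (`videotestsrc`, `videoconvert`, `vp8enc`, `rtpvp8pay`, `udpsink`) are standard GStreamer plugins, and `deadline=1` puts `vp8enc` into libvpx's realtime mode. The hardware variant assumes a VA-API capable GPU with the gstreamer-vaapi plugins installed, providing `vaapih264enc`. That is an assumption about the setup, not something confirmed for my machine.

```rust
/// Encoder choices to compare.
#[derive(Debug, Clone, Copy)]
enum Encoder {
    /// Software VP8 in libvpx's realtime mode.
    Vp8Realtime,
    /// Hardware H.264 through VA-API (assumes gstreamer-vaapi and a supported GPU).
    VaapiH264,
}

/// Builds gst-launch-1.0 arguments for a live test pattern encoded and sent as RTP over UDP.
fn pipeline_args(encoder: Encoder, host: &str, port: u16) -> Vec<String> {
    let encode = match encoder {
        Encoder::Vp8Realtime => "vp8enc deadline=1 ! rtpvp8pay",
        Encoder::VaapiH264 => "vaapih264enc ! rtph264pay",
    };
    let pipeline = format!(
        "videotestsrc is-live=true ! videoconvert ! {} ! udpsink host={} port={}",
        encode, host, port
    );
    // gst-launch-1.0 accepts the description as separate whitespace-delimited arguments.
    pipeline.split_whitespace().map(String::from).collect()
}

fn main() {
    // Printed rather than executed, so each line can be pasted into a shell.
    for enc in [Encoder::Vp8Realtime, Encoder::VaapiH264] {
        println!("gst-launch-1.0 {}", pipeline_args(enc, "127.0.0.1", 5000).join(" "));
    }
}
```

A matching receiver would use `udpsrc` with the corresponding depayloader and decoder (`rtpvp8depay` and `vp8dec` for VP8). Because the capture and GL texture download are taken out of the picture, a slow result here points at the encoder rather than the application.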

For the future

This project is far from its conclusion; I would definitely like to see it become usable. When I get time to work on it again, I will potentially look into the following:

- Having GStreamer perform the GL texture download using the gldownload element, or otherwise optimising the texture download process.

- Hardware video encoding for lower latency.

- Replacing WebRTC with GStreamer's UDP sink & source elements, if this proves advantageous.

- Cleaning up the Rust crates – right now, they are very messy!

  - I find it hard to build abstractions in Rust where lifetimes are involved.

- Running RSVR applications directly on a mobile phone:

  - Under Android

  - Under Halium (as long as GPU hardware acceleration is available)

I hope to follow up this blog post in the future.